AP Syllabus focus:

‘Polling is more precise when it uses accurate sampling and a margin of error, neutrally framed questions, and clear reporting with conclusions supported by the data.’

Polling methodology determines whether public opinion data is trustworthy. AP Gov focuses on how scientific polls are designed, how questions can bias responses, and how results should be reported to support accurate, defensible conclusions.

Scientific polling and sampling

Why sampling matters

A poll becomes “scientific” when it uses a method that gives every member of the target population a known, nonzero chance of selection and reports uncertainty transparently.

Scientific poll: A survey that uses systematic sampling procedures (often probability-based) and transparent reporting to estimate public opinion in a larger population.

Probability sampling and representativeness

Accurate sampling aims to mirror the target population (e.g., U.S. adults, registered voters, likely voters) so estimates generalise beyond the sample.

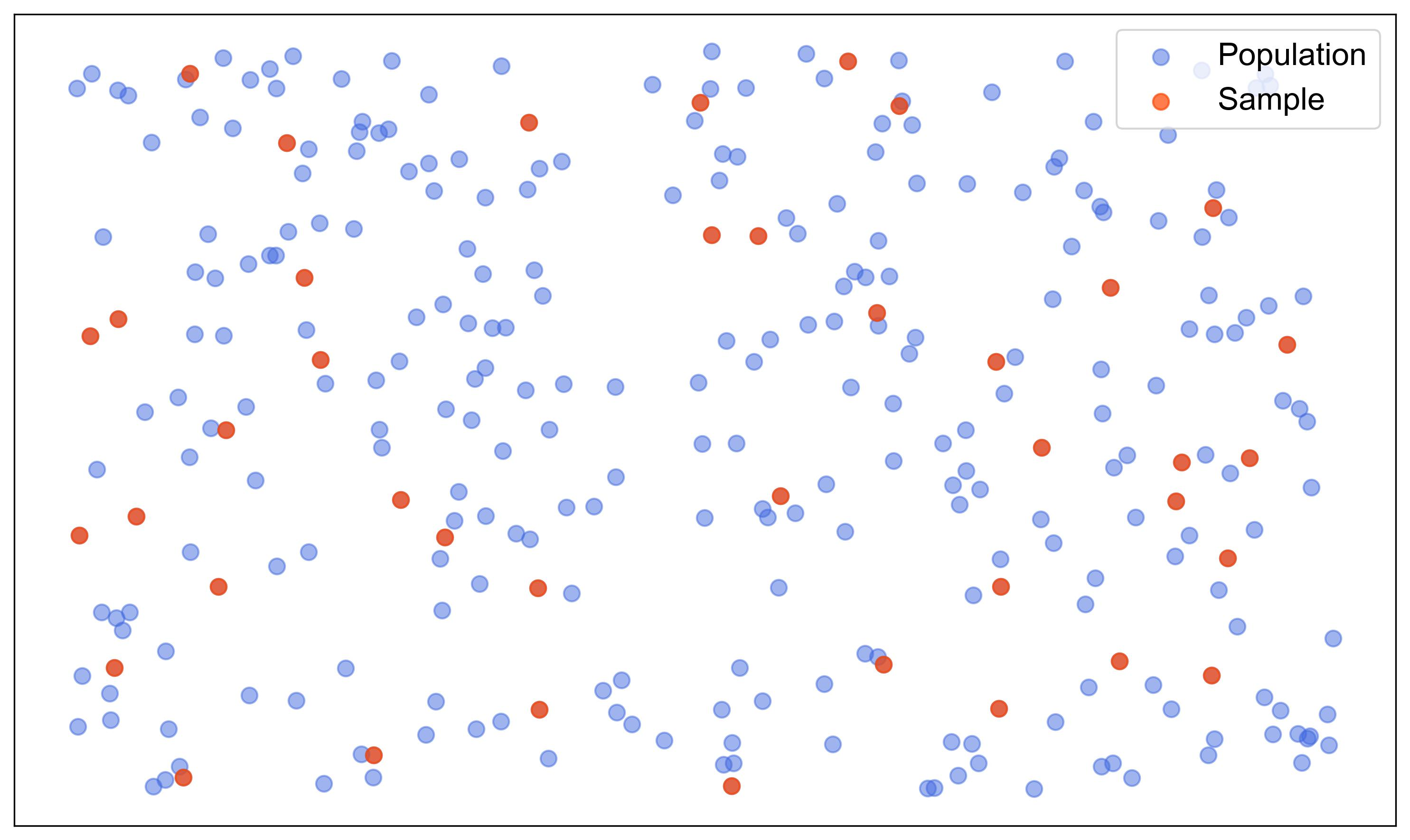

A simple random sampling visualization in which the full population is shown alongside a randomly selected subset (the sample) highlighted in a contrasting color. The figure reinforces that probability-based selection is about giving each unit in the population a fair chance of inclusion, which is foundational for defensible inference from sample to population.

Random sampling: selection is based on chance rather than researcher preference

Representative sample: the sample’s key traits (age, race, education, region) resemble the population’s distribution

Common tools:

Random-digit dial (telephone)

Address-based sampling (mail/online recruitment)

Carefully managed online panels (when recruitment and weighting are transparent)
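To make the idea of chance-based selection concrete, here is a minimal Python sketch of a simple random sample. The sampling frame of 10,000 ID numbers, the sample size of 500, and the function name `simple_random_sample` are hypothetical choices for illustration; real polls draw from telephone or address frames rather than a list of integers.

```python
import random

def simple_random_sample(population, n, seed=None):
    """Draw n distinct units; every unit has the same chance of selection."""
    rng = random.Random(seed)          # seeded only so the example is repeatable
    return rng.sample(population, n)   # sampling without replacement

# Hypothetical sampling frame: 10,000 people identified by ID number.
frame = list(range(10_000))
sample = simple_random_sample(frame, 500, seed=42)

print(len(sample))        # number of people selected
print(len(set(sample)))   # same number, so nobody was selected twice
```

Because selection depends only on the random draw, the researcher's preferences cannot influence who ends up in the sample.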

Sources of sampling error and bias

Even with good methods, polls can be distorted by who is reachable and who responds.

Sampling error: the chance difference between a sample estimate and the true population value that arises because only part of the population is surveyed

Selection bias: some groups are systematically more/less likely to be included (e.g., coverage gaps)

Nonresponse bias: selected individuals decline, and nonrespondents differ politically from respondents

Weighting: statistical adjustment to align sample demographics with known benchmarks; helpful, but it cannot fully fix a flawed sample
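The weighting idea can be illustrated with a small Python sketch. It assumes a single weighting variable (age group) and made-up numbers; the group names, counts, benchmark shares, and function names are all hypothetical, and real pollsters usually weight on several variables at once.

```python
def group_weights(sample_counts, population_shares):
    """Weight for each group = population share / sample share."""
    total = sum(sample_counts.values())
    return {g: population_shares[g] / (sample_counts[g] / total)
            for g in sample_counts}

def weighted_support(support_by_group, sample_counts, weights):
    """Overall support after each respondent is given their group's weight."""
    num = sum(support_by_group[g] * sample_counts[g] * weights[g]
              for g in sample_counts)
    den = sum(sample_counts[g] * weights[g] for g in sample_counts)
    return num / den

# Hypothetical poll: younger adults are under-represented (30% vs 50%).
sample_counts = {"under_45": 300, "45_plus": 700}
population_shares = {"under_45": 0.5, "45_plus": 0.5}
support_by_group = {"under_45": 0.60, "45_plus": 0.40}

weights = group_weights(sample_counts, population_shares)
unweighted = sum(support_by_group[g] * sample_counts[g]
                 for g in sample_counts) / sum(sample_counts.values())
weighted = weighted_support(support_by_group, sample_counts, weights)

print(round(unweighted, 2))  # 0.46
print(round(weighted, 2))    # 0.5
```

In this sketch weighting moves the estimate from 46% to 50% by correcting the age imbalance. It does nothing if the younger adults who responded hold different views from the younger adults who did not, which is why weighting cannot fully fix a flawed sample.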

Margin of error and what it means

Interpreting uncertainty

Polls summarise uncertainty with a margin of error (MOE), which is tied to sample size and statistical confidence. MOE is most meaningful when probability sampling assumptions are approximately met.

Margin of error: A stated range around a poll estimate that reflects expected sampling variation at a given confidence level, assuming a probability-based sample.

A key implication is that larger samples generally reduce expected sampling variation, but MOE does not account for all errors (like biased wording or nonresponse).

$$\text{MOE} = z\sqrt{\frac{\hat{p}(1-\hat{p})}{n}}$$

$z$ = z-score for the chosen confidence level (unitless)

$\hat{p}$ = estimated proportion in the sample (decimal)

$n$ = sample size (number of respondents)
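As a worked illustration, the short Python sketch below applies the standard margin-of-error formula for a proportion, z × √(p̂(1 − p̂) / n), to a few sample sizes. It assumes a simple random sample, a 95% confidence level (z ≈ 1.96), and an estimated proportion of 0.5; the function name `margin_of_error` and the sample sizes are illustrative choices.

```python
import math

def margin_of_error(p_hat, n, z=1.96):
    """MOE for a sample proportion; z=1.96 corresponds to 95% confidence."""
    return z * math.sqrt(p_hat * (1 - p_hat) / n)

# Quadrupling the sample size roughly halves the margin of error.
for n in (250, 1000, 4000):
    moe_points = 100 * margin_of_error(0.5, n)
    print(n, round(moe_points, 1))
# 250 6.2
# 1000 3.1
# 4000 1.5
```

The output shows why larger samples help but with diminishing returns: going from 1,000 to 4,000 respondents only narrows the MOE from about 3.1 to about 1.5 percentage points.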

Question wording and survey design

Neutral framing vs leading language

The syllabus emphasises neutrally framed questions because wording can shape responses and create misleading “public opinion.”

Avoid leading questions that suggest a preferred answer (“Do you agree that the irresponsible policy should be stopped?”)

Watch for loaded language that adds emotional cues (“welfare” vs “assistance to the poor”)

Limit double-barrelled questions (asking two things at once)

Provide balanced response options and comparable information on both sides

Push poll: A “poll” designed primarily to influence opinions using persuasive or misleading questions rather than to measure them.

Order, context, and response choices

Even neutral wording can be undermined by design choices.

Question order effects: earlier questions prime later answers

Context effects: recent news or prior items change interpretation

Response option design: unbalanced scales or missing options (e.g., no “don’t know”) can force artificial opinions

Clear reporting and defensible conclusions

What credible reporting should include

Clear reporting helps audiences judge quality and prevents overclaiming—conclusions must be supported by the data.

Sponsor and field dates (timing can affect responses)

Target population definition (adults vs registered vs likely voters)

Sampling method and sample size ($n$)

Full question wording and response options

MOE and confidence level (and any subgroup cautions)

Weighting variables and mode (phone, online, mixed)

Common reporting mistakes to avoid

Treating differences that fall within the MOE as definitive leads or changes

Ignoring methodology and focusing only on headlines

Overgeneralising from unrepresentative samples or small subgroups

Presenting causal claims from descriptive polling (polls measure attitudes; they rarely establish causation)
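The first mistake in the list can be checked with a rough calculation. The sketch below compares a candidate's lead with the margin of error of that lead, which is larger than the MOE reported for a single percentage. It assumes a simple random sample, both percentages coming from the same poll, and 95% confidence; the function names and poll figures are hypothetical.

```python
import math

def lead_margin_of_error(p_a, p_b, n, z=1.96):
    """MOE for the difference p_a - p_b when both come from the same poll."""
    variance = (p_a + p_b - (p_a - p_b) ** 2) / n
    return z * math.sqrt(variance)

def lead_is_clear(p_a, p_b, n, z=1.96):
    """True only if the lead is larger than its own margin of error."""
    return abs(p_a - p_b) > lead_margin_of_error(p_a, p_b, n, z)

# Hypothetical poll of 1,000 respondents.
print(round(100 * lead_margin_of_error(0.48, 0.45, 1000), 1))  # 6.0
print(lead_is_clear(0.48, 0.45, 1000))   # False: a 3-point lead is too small
print(lead_is_clear(0.54, 0.42, 1000))   # True: a 12-point lead is not
```

Under these assumptions a 48% to 45% result is best reported as a close race rather than a lead for Candidate A.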

FAQ

Likely-voter screens use past voting, interest, and intention measures.

Different screens can shift results because turnout is uneven across groups.

Coverage error: some people cannot be reached by the sampling frame.

Nonresponse bias: reachable people decline, and their views differ systematically.

Subgroups have smaller effective sample sizes, raising uncertainty.

Weighting can also amplify variability for small groups.

Unbalanced scales or missing neutral options can “push” choices.

Including “don’t know” can reduce forced or unstable opinions.

At minimum:

population, $n$, field dates

methodology/mode and weighting basics

exact wording

MOE/confidence level and appropriate caveats
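The FAQ points about subgroups and weighting can be made concrete with Kish's approximation for effective sample size, (Σw)² / Σw², a commonly used rule of thumb that is not part of the syllabus text. The sketch below is illustrative only; the weights are made up and the function name is hypothetical.

```python
def effective_sample_size(weights):
    """Kish approximation: (sum of weights)^2 / (sum of squared weights)."""
    total = sum(weights)
    return total * total / sum(w * w for w in weights)

# Hypothetical subgroup of 100 respondents.
equal = [1.0] * 100                   # everyone counts the same
uneven = [1.0] * 80 + [3.0] * 20      # 20 respondents are weighted up 3x

print(round(effective_sample_size(equal), 1))   # 100.0
print(round(effective_sample_size(uneven), 1))  # 75.4
```

With uneven weights the 100 interviews carry roughly the information of 75, so the subgroup's uncertainty is larger than its raw size suggests.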

Practice Questions

(1–3 marks) Explain why neutrally framed questions are important in scientific polling.

1 mark: Identifies that neutral wording reduces bias/leading effects.

1 mark: Explains that biased wording can change responses and misrepresent true opinion.

1 mark: Links neutrality to more valid conclusions supported by the data.

(4–6 marks) A poll claims Candidate A leads Candidate B based on a survey. Describe two methodological features you would evaluate to judge whether the claim is reliable, and explain how each feature affects the credibility of the conclusion.

1 mark each (2 marks): Identifies two relevant features (e.g., sampling method/representativeness; margin of error; full question wording; nonresponse/weighting; clear reporting of population and dates).

1–2 marks each (2–4 marks): Explains for each feature how it can increase/decrease accuracy (e.g., biased sample distorts estimates; MOE indicates uncertainty; leading wording inflates support; unclear reporting prevents evaluation; weighting may correct imbalances but cannot fully fix bias).