AP Syllabus focus:

‘Enduring Understanding: The t-distribution is key for modeling variation in the context of regression analysis. Learning Objective: Calculate an appropriate test statistic for the slope of a regression model. Essential Knowledge: The test statistic for the slope (b) is calculated as t = (b − β₀)/SE_b, where β₀ is the hypothesized slope value under the null hypothesis, and SE_b is the standard error of the slope. This test statistic follows a t-distribution with n − 2 degrees of freedom, assuming conditions are met and the null hypothesis is true.’

This subsubtopic develops the essential skill of computing a regression slope test statistic, enabling inference about population relationships using sample-based estimates and modeled variation.

Understanding the Purpose of the Test Statistic

The test statistic for a regression slope quantifies how far the sample slope deviates from a hypothesized population slope, relative to the slope’s estimated variability.

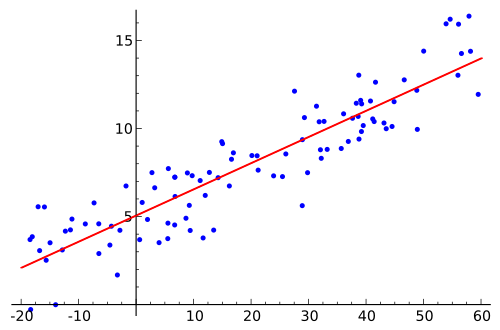

Scatterplot of data points with a fitted linear regression line. The line represents the estimated relationship between the explanatory and response variables, with its slope serving as the point estimate bbb used in the test statistic. This image focuses on the fitted line and random variation around it without adding procedures beyond those required in the syllabus. Source.

This measure allows students to compare observed results against what would be expected if the null hypothesis were true, anchoring slope-based inference in the t-distribution, which appropriately models sampling variation when estimating parameters from sample data.

Before calculating the test statistic, it is important to clarify what the slope represents. In simple linear regression, the population slope β represents the average change in the response variable for each one-unit increase in the explanatory variable. When a research question involves testing a claim about this relationship, the slope becomes the focal point for significance testing.

Slope of a Regression Model (β): The parameter describing the expected change in the mean of the response variable for each one-unit increase in the explanatory variable.

The calculation of the test statistic depends on the comparison between the observed slope and the hypothesized value under the null hypothesis. Students should recall that the sample slope b serves as an unbiased estimate of β, and that its variability is expressed through the standard error of the slope, denoted SE_b.

Components Required for the Test Statistic

Three essential elements are needed to compute the test statistic:

Sample slope (b): Obtained from regression output and used as the point estimate.

Hypothesized slope (β₀): The value specified by the null hypothesis, often 0 when testing for no linear association.

Standard error of the slope (SE_b): Measures variation in slope estimates across repeated samples.

Standard Error of the Slope (SE_b): The estimated standard deviation of sample slope values across repeated random samples of the same size from the population.

These components work together to standardize the difference between b and β₀, expressing it in units of estimated standard deviation. This standardization allows comparison to the t-distribution.

Students must ensure that conditions for regression inference are satisfied. While this subsubtopic does not require verifying conditions in detail, the validity of the resulting t-statistic depends on assumptions such as linearity, independence, constant variability of residuals, and approximate normality of the response distribution for each x-value.

Calculating the Test Statistic Using the t-Distribution

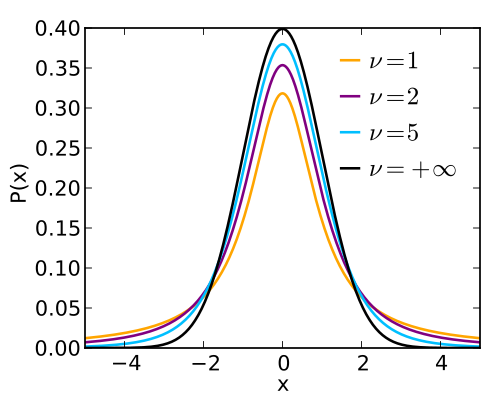

The slope test statistic follows the t-distribution with n − 2 degrees of freedom, reflecting the two parameters (intercept and slope) estimated during regression. The t-distribution is appropriate because the population standard deviation of residuals is unknown and must be estimated from the sample.

EQUATION

= Sample slope from regression

= Hypothesized population slope under the null hypothesis

= Standard error of the slope estimate

This formula computes how many standard errors separate the observed slope from the hypothesized value. A larger absolute t-value indicates stronger evidence against the null hypothesis, as the observed slope appears farther from the hypothesized slope than would typically occur by chance.

After computing the t-statistic, it is interpreted using the t-distribution with n − 2 degrees of freedom, which governs how sample-to-sample variation behaves when estimating a regression slope.

Plot of probability density functions for several Student’s t-distributions with different degrees of freedom. The curves are bell-shaped and centered at zero, emphasizing that the t-statistic for the slope is compared to this family of distributions when making inferences. The image also shows multiple degrees of freedom simultaneously, which goes slightly beyond the syllabus requirement but helps visualize how the t-distribution changes as sample size increases. Source.

This distribution captures uncertainty arising from finite sample sizes and is more spread out than the normal distribution, particularly for smaller n.

Interpreting the Meaning of the Test Statistic

Although this subsubtopic focuses on calculation rather than interpretation, understanding its role within inference strengthens comprehension. The magnitude of the t-statistic reflects how unusual the observed slope would be if the null hypothesis were true. The direction (positive or negative) indicates whether the sample slope is above or below the hypothesized slope.

A complete significance test requires comparing this statistic to critical values or determining a p-value, but those steps belong to later subsubtopics. Here, students must master the computation itself and the reasoning that the statistic quantifies deviation relative to estimated variability.

Process for Computing the Test Statistic

Students can follow a structured process:

Identify the observed slope b from regression output.

Determine the hypothesized slope β₀ from the null hypothesis.

Obtain the standard error SE_b from the regression analysis.

Compute the t-statistic using the formula .

Recognize that the resulting statistic follows a t-distribution with n − 2 degrees of freedom.

Each step contributes to forming a standardized measure that reflects how plausible the observed slope is under the assumption that the null hypothesis is correct.

FAQ

The structure of the test statistic does not change; the hypothesised slope is simply replaced with the specified value.

A non-zero hypothesised slope shifts the benchmark against which the sample slope is compared, meaning a slope close to zero could still produce a large test statistic if the hypothesised value is far from zero.

Two parameters are estimated in simple linear regression: the intercept and the slope. Each estimated parameter reduces the available degrees of freedom.

Using n − 2 accounts for the uncertainty associated with estimating both parameters, giving a more accurate representation of expected sampling variability when assessing the slope.

The test statistic is moderately robust, but certain violations can distort results.

Non-linearity inflates residual error, increasing the standard error and reducing the test statistic.

Heteroscedasticity can underestimate or overestimate the slope’s variability.

Non-independence of observations especially threatens validity, often producing misleading test statistics.

It scales the raw difference between the sample slope and hypothesised slope, converting it into a standardised measure.

A small standard error means slopes across samples tend to be similar, making even small deviations from the hypothesised slope meaningful. A large standard error indicates high variability, so bigger differences are needed to produce a sizeable test statistic.

No. The sign of the test statistic always matches the sign of the difference between the sample slope and hypothesised slope.

A positive test statistic indicates the sample slope is above the hypothesised value; a negative test statistic means it is below. The sign is therefore aligned with the observed direction of association, not altered by the standard error.

Practice Questions

Question 1 (1–3 marks)

A researcher fits a simple linear regression model to investigate whether the amount of weekly exercise predicts resting heart rate. The null hypothesis states that the population slope is 0. The sample slope is -0.42 and the standard error of the slope is 0.18.

Calculate the test statistic for the slope.

Question 1 (1–3 marks)

Correct substitution into the test statistic formula (b − beta0) / SEb:

(-0.42 − 0) / 0.18 (1 mark)Correct numerical value: approximately -2.33 (allow rounding to -2.3 or -2.33) (1 mark)

Indication that this is the test statistic for assessing the slope (1 mark)

Maximum: 3 marks

Question 2 (4–6 marks)

A scientist studies whether the concentration of a chemical in water (in mg/L) is associated with the growth rate of a plant species. A simple linear regression is performed. The hypothesised population slope under the null hypothesis is 0. The sample slope is 1.37, and the standard error of the slope is 0.44.

(a) Calculate the test statistic for the slope.

(b) Explain what the value of this test statistic indicates about the sample slope in relation to the null hypothesis.

(c) State the distribution, including its degrees of freedom, that would be used to determine the p-value for this test statistic.

Question 2 (4–6 marks)

(a)

Correct substitution: (1.37 − 0) / 0.44 (1 mark)

Correct numerical value: approximately 3.11 (allow rounding to 3.1 or 3.11) (1 mark)

(b)

Explanation that a large positive test statistic indicates the observed sample slope is several standard errors above the hypothesised slope (1 mark)

Statement that this suggests the slope is unlikely to be 0 if the test statistic is large relative to the t-distribution (1 mark)

(c)

Correct distribution named: t-distribution (1 mark)

Correct degrees of freedom stated: n − 2 (1 mark)

Maximum: 6 marks