AP Syllabus focus:

‘Detailed examination of voluntary response bias, occurring when samples consist of volunteers or self-selected participants, leading to non-representative samples of the population. Discussion will include examples and strategies to mitigate this form of bias in data collection.’

Voluntary response bias arises when individuals choose whether to participate, causing strongly opinionated or atypical responders to dominate results and undermining the reliability of statistical conclusions.

Voluntary Response Bias

Voluntary response bias is a specific form of sampling bias that occurs when participants in a study self-select into the sample instead of being chosen through a random process. Because those who choose to respond often differ systematically from those who do not, the resulting sample is unlikely to represent the population accurately.

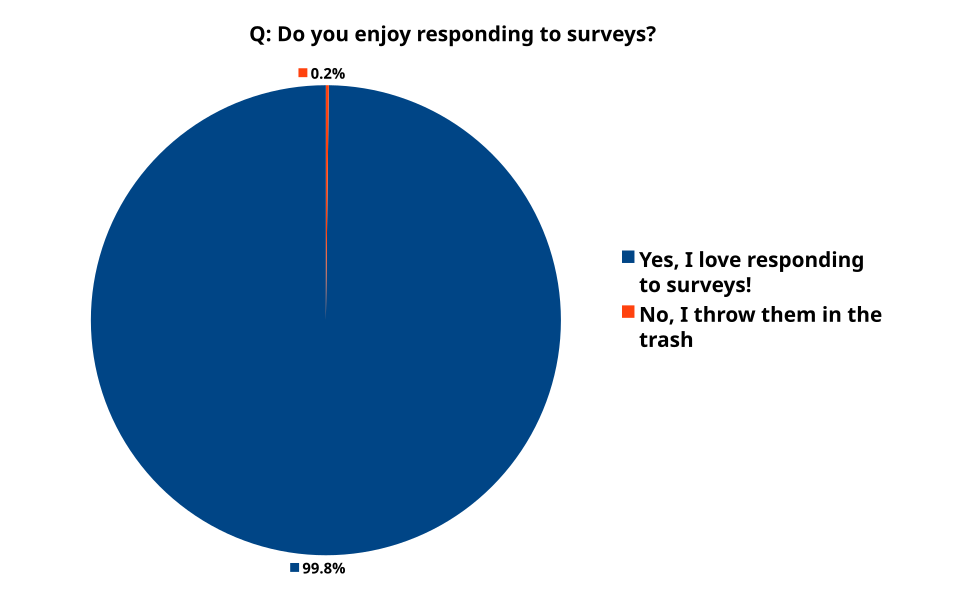

Pie chart showing how only people who enjoy responding to surveys choose to participate, illustrating how voluntary response samples can heavily overrepresent strongly motivated responders. Source.

When discussing voluntary response bias, it is crucial to recognize that self-selection is the defining feature.

Self-Selected Sample: A sample in which participants choose for themselves whether to be included, rather than being selected by the researcher using a random method.

When individuals self-select, their decision to participate is often driven by strong feelings, convenience, or personal characteristics. These factors are not spread evenly across the population, so the people who respond differ systematically from those who do not. This makes voluntary response bias particularly problematic in surveys, online polls, and feedback-based studies.

Why Voluntary Response Bias Occurs

Voluntary response bias emerges because people with certain characteristics are more motivated to respond than others. Common contributors include:

Strong opinions or emotions, often extreme, prompting participation.

Personal relevance, making individuals with a stake in the outcome more likely to volunteer.

Convenience, where responding is easy and low-cost for specific groups but not for others.

Awareness or access, where only those who encounter the survey invitation can respond.

These factors create a systematic difference between responders and non-responders, violating the principle that samples must reflect the entire population.

Characteristics of Voluntary Response Samples

Voluntary response samples share identifiable features that distinguish them from properly randomized samples. These characteristics include:

Non-random participation, meaning chance plays no role in selection.

Overrepresentation of motivated individuals, often with extreme views or unusual experiences.

Underrepresentation of the indifferent, busy, or less informed, who may be equally or more typical of the population.

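Distorted spread and center. If motivated responders hold extreme views on both sides, the spread of responses tends to be inflated. If they lean mostly one way, responses cluster toward that extreme and the center shifts.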

Each of these characteristics undermines the goal of collecting data that accurately mirrors population patterns.
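The following minimal Python sketch shows how overrepresenting motivated individuals can affect center and spread. The 1–5 rating distribution and the response probabilities are hypothetical assumptions chosen for illustration, not data from any study. The function names are also illustrative.

```python
# Hypothetical 1-5 satisfaction ratings and the share of the population giving each
RATINGS = [1, 2, 3, 4, 5]
POP_SHARE = [0.10, 0.20, 0.40, 0.20, 0.10]


def weighted_mean_sd(values, weights):
    """Mean and standard deviation of a distribution given as values and weights."""
    total = sum(weights)
    mean = sum(v * w for v, w in zip(values, weights)) / total
    var = sum(w * (v - mean) ** 2 for v, w in zip(values, weights)) / total
    return mean, var ** 0.5


def respondent_share(pop_share, response_prob):
    """Expected share of respondents at each rating, assuming people with a
    given rating respond with the (hypothetical) probability listed for it."""
    raw = [s * p for s, p in zip(pop_share, response_prob)]
    total = sum(raw)
    return [r / total for r in raw]


# Assumption: people at both extremes are the most motivated to respond
both_extremes = respondent_share(POP_SHARE, [0.50, 0.10, 0.05, 0.10, 0.50])
# Assumption: only dissatisfied people are strongly motivated to respond
unhappy_only = respondent_share(POP_SHARE, [0.50, 0.10, 0.05, 0.05, 0.05])

pop_mean, pop_sd = weighted_mean_sd(RATINGS, POP_SHARE)
both_mean, both_sd = weighted_mean_sd(RATINGS, both_extremes)
unhappy_mean, unhappy_sd = weighted_mean_sd(RATINGS, unhappy_only)

print(f"population:    mean={pop_mean:.2f}, sd={pop_sd:.2f}")
print(f"both extremes: mean={both_mean:.2f}, sd={both_sd:.2f}")
print(f"unhappy only:  mean={unhappy_mean:.2f}, sd={unhappy_sd:.2f}")
```

When volunteers come from both extremes, the mean stays at 3 but the standard deviation rises from about 1.10 to about 1.66. When only dissatisfied people are motivated, the mean drops to about 2.05 instead.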

Why Voluntary Response Bias Threatens Validity

Voluntary response bias mainly threatens external validity, meaning the ability to generalize conclusions from the sample to the population. The threat arises because the sample:

Does not approximate the population distribution.

Fails the random sampling condition required for valid inference, such as confidence intervals and significance tests.

Reflects participant motivation rather than population characteristics.

When a dataset suffers from voluntary response bias, sample statistics such as the mean or proportion become unreliable (biased) estimates of the corresponding population parameters. The researcher may draw conclusions that reflect the interests, perspectives, or behaviors of only a subset of individuals.
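A simple calculation can quantify the size of this bias. Suppose, hypothetically, that a proportion p of the population holds some view. People who hold it respond with probability r_yes, and everyone else responds with probability r_no. The expected proportion among respondents is then p·r_yes / (p·r_yes + (1 - p)·r_no). This equals p only when r_yes = r_no. The following minimal Python sketch uses an illustrative function name and illustrative numbers:

```python
def expected_respondent_proportion(p, r_yes, r_no):
    """Expected proportion of respondents who hold a view.

    p: true population proportion holding the view
    r_yes: assumed probability that someone holding the view responds
    r_no: assumed probability that someone not holding it responds
    """
    responding_yes = p * r_yes
    responding_no = (1 - p) * r_no
    return responding_yes / (responding_yes + responding_no)


# Hypothetical: 30% oppose a fare increase, and opponents are 4x as likely to call in
print(round(expected_respondent_proportion(0.30, 0.20, 0.05), 3))
```

With these assumed numbers, a 30% minority appears to make up about 63% of respondents.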

Distinguishing Voluntary Response Bias from Other Biases

While voluntary response bias is a form of sampling bias, it differs from others in notable ways:

Undercoverage bias excludes part of the population due to sampling methodology, whereas voluntary response bias stems from self-selection, not methodological exclusion.

Nonresponse bias occurs when selected participants fail to respond; voluntary response bias arises when participants were never selected in the first place but choose to participate.

Response bias concerns inaccurate answers (for example, because of question wording or social pressure), whereas voluntary response bias concerns who participates, not how they respond.

Recognizing these distinctions helps prevent conflating sources of error and ensures the proper corrective strategies are applied.

Strategies to Reduce Voluntary Response Bias

Researchers can minimize voluntary response bias using intentional and structured data collection strategies. Key approaches include:

Using Random Sampling

Random sampling uses chance to decide who is selected. In a simple random sample, every population member has an equal chance of being chosen. Participants cannot choose themselves, so this approach reduces the systematic differences that self-selection introduces.

Using random sampling methods instead of open volunteer calls helps ensure that each individual in the population has a known, nonzero chance of being selected.
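The following minimal Python sketch draws a simple random sample with the standard library's random.sample. The population of 10,000 people and the 30% support rate are hypothetical. In practice, the list would be a sampling frame of real population members.

```python
import random

# Hypothetical population of 10,000 people: 1 = supports the proposal, 0 = does not
random.seed(1)
population = [1] * 3_000 + [0] * 7_000  # true proportion = 0.30

# Simple random sample: the researcher, not the participants, decides who is
# included, and every group of 500 people is equally likely to be chosen.
srs = random.sample(population, k=500)
p_hat = sum(srs) / len(srs)
print(f"true p = 0.30, SRS estimate = {p_hat:.3f}")
```

random.sample draws without replacement and gives every subset of size k the same chance. The estimate therefore varies from sample to sample, but it is not systematically pulled toward any group.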

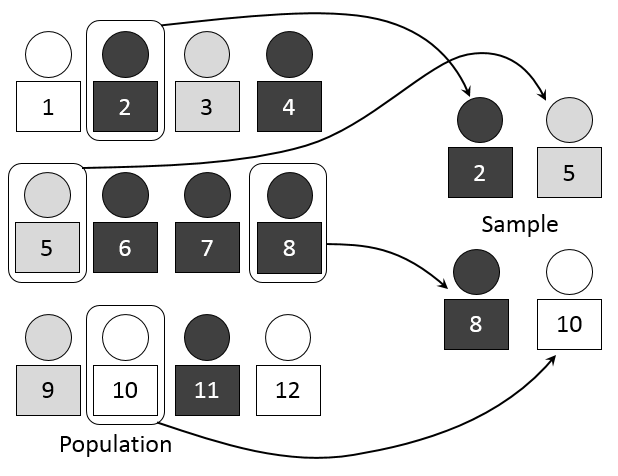

Diagram illustrating a simple random sample, in which each member of the population has an equal chance of being chosen, contrasted with voluntary response sampling, in which only self-selected individuals appear. Source.

Employing Follow-Up Procedures

After selecting participants at random, researchers can use follow-up messages, phone calls, or reminders to encourage those who do not respond at first. This keeps the final sample from being limited to the most eager responders and promotes greater representativeness.
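Follow-ups help because each extra contact gives reluctant people another chance to respond. The following deterministic Python sketch makes a hypothetical assumption: each contact independently succeeds with a fixed probability, and that probability is higher for people with strong views than for everyone else. All numbers and function names are illustrative.

```python
def cumulative_response_rate(r, contacts):
    """Chance of responding at least once over `contacts` attempts, assuming
    each attempt independently succeeds with probability r."""
    return 1 - (1 - r) ** contacts


def respondent_proportion(p, r_strong, r_mild, contacts):
    """Expected share of respondents with strong views after repeated contacts.

    p: hypothetical true population proportion with strong views
    r_strong: assumed per-contact response probability for strong views
    r_mild: assumed per-contact response probability for everyone else
    """
    strong = p * cumulative_response_rate(r_strong, contacts)
    mild = (1 - p) * cumulative_response_rate(r_mild, contacts)
    return strong / (strong + mild)


for contacts in (1, 2, 3, 5, 10):
    share = respondent_proportion(0.30, 0.50, 0.20, contacts)
    print(f"{contacts:>2} contact(s): share with strong views = {share:.3f}")
```

Under these assumptions, the respondent share falls from about 0.52 after one contact toward the true 0.30 as contacts increase. If some people would never respond no matter how often they were contacted, the gap would not close fully.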

Limiting Open Calls for Participation

Avoiding broad public invitations for participation, especially for surveys or studies likely to attract extreme responders, reduces the risk of obtaining a skewed sample.

Ensuring Accessibility for All Population Members

Making surveys available across multiple platforms and formats reduces the chance that convenience alone determines who responds. However, this does not remove self-selection by itself.

Implications for AP Statistics

Understanding voluntary response bias is essential for evaluating study designs and identifying weaknesses in data collection. Students must be able to:

Identify voluntary response bias in descriptions of studies.

Explain how self-selection leads to systematic errors.

Discuss why conclusions drawn from voluntary response samples are unreliable.

Propose strategies that incorporate randomness to enhance representativeness.

These skills form a critical part of interpreting data validity and designing trustworthy statistical studies within the AP Statistics framework.

FAQ

What motivates people to respond besides strong opinions?

People may respond because the topic feels personally relevant, even if they do not hold extreme views. Convenience also plays a role; individuals who frequently check email or social media may encounter survey links more often.

External motivators, such as wanting to support a friend or organisation conducting the survey, can further influence participation. These influences still lead to imbalance because they are not evenly distributed across the population.

How can voluntary response bias distort relationships between variables?

Self-selected participants may share common characteristics beyond their opinions. If so, they may create the illusion of a relationship between variables that does not exist in the full population, or hide or distort one that does.

For example, if mostly older individuals volunteer for a health survey, any patterns relating health outcomes to age may be distorted, because the few younger volunteers may not be typical of younger people in general. This distortion makes it difficult to interpret genuine associations.
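The following Python sketch simulates how self-selection can create the illusion of a relationship that does not exist in the full population. Interest in the survey topic and free time are generated independently. People are assumed to volunteer only when their combined interest and free time is high enough. Both variables and the volunteering rule are hypothetical assumptions for illustration.

```python
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


random.seed(42)
# Hypothetical population: the two traits are generated independently,
# so they are unrelated in the population.
interest = [random.gauss(0, 1) for _ in range(20_000)]
free_time = [random.gauss(0, 1) for _ in range(20_000)]

# Assumed volunteering rule: take part only if interest + free time is high
volunteers = [(i, f) for i, f in zip(interest, free_time) if i + f > 1.5]
vol_interest = [i for i, _ in volunteers]
vol_free_time = [f for _, f in volunteers]

print(f"population r = {pearson_r(interest, free_time):.2f}")
print(f"volunteer r  = {pearson_r(vol_interest, vol_free_time):.2f}")
```

In the full population, the correlation is near 0. Among volunteers it is strongly negative, because a volunteer with little free time must have high interest to have taken part, and vice versa.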

Does a larger sample size fix voluntary response bias?

A larger sample size does not resolve voluntary response bias because the issue lies in who chooses to participate, not how many respond.

Even thousands of responses can be unrepresentative if responders differ systematically from non-responders. Bias is a structural problem, not a matter of quantity.
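A small simulation makes this concrete. The true proportion and the response probabilities below are hypothetical assumptions. Each simulated person responds with a probability that depends on whether they hold a strong opinion.

```python
import random

random.seed(7)
P_TRUE = 0.30    # hypothetical true proportion holding a strong opinion
R_STRONG = 0.20  # assumed chance that a strong-opinion holder responds
R_OTHER = 0.05   # assumed chance that anyone else responds


def voluntary_estimate(population_size):
    """Return (responses, estimated proportion with a strong opinion)
    when only people who choose to respond are counted."""
    strong_responders = 0
    other_responders = 0
    for _ in range(population_size):
        strong = random.random() < P_TRUE
        responds = random.random() < (R_STRONG if strong else R_OTHER)
        if responds and strong:
            strong_responders += 1
        elif responds:
            other_responders += 1
    n = strong_responders + other_responders
    return n, strong_responders / n


for size in (2_000, 20_000, 200_000):
    n, p_hat = voluntary_estimate(size)
    print(f"{n:>6} responses -> estimate {p_hat:.3f} (truth {P_TRUE})")
```

Under these assumptions, the number of responses grows roughly tenfold at each step. Yet every estimate stays in the neighborhood of 0.63 rather than the true 0.30. More responses only make the wrong answer more precise.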

Why are online polls especially prone to voluntary response bias?

Online polls are usually open-access, meaning anyone who encounters them can respond, and individuals with stronger reactions are more likely to do so.

They also rely on self-selection through digital platforms, where algorithms may disproportionately show content to certain groups, amplifying imbalances further.

How can researchers check whether voluntary response bias is present?

Researchers can compare characteristics of respondents to known characteristics of the target population, such as age distributions or geographic patterns.

If response patterns deviate substantially, this suggests the presence of voluntary response bias.

Possible indicators include unusually extreme responses, clustering within specific demographic groups, and very low participation rates.
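A chi-square goodness-of-fit statistic computed on respondent demographics is one way to make the comparison with known population characteristics concrete. The respondent counts and population age shares below are hypothetical. The respondents are not a random sample, so treat the result as a rough diagnostic. A large value flags a mismatch. A small value does not prove the absence of bias, because respondents can match the population on age yet still differ in their opinions.

```python
# Hypothetical age breakdown of 200 survey respondents
observed = {"18-34": 40, "35-54": 60, "55+": 100}
# Hypothetical known age distribution of the target population
population_share = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}

n = sum(observed.values())
chi_sq = 0.0
for group, count in observed.items():
    expected = n * population_share[group]
    chi_sq += (count - expected) ** 2 / expected
    print(f"{group:>6}: observed {count:>3}, expected {expected:.0f}")

# Chi-square critical value for df = 3 - 1 = 2 at alpha = 0.05
CRITICAL_DF2 = 5.991
print(f"chi-square = {chi_sq:.2f}; exceeds {CRITICAL_DF2}: {chi_sq > CRITICAL_DF2}")
```

Here the statistic is about 20.95, far above 5.991. Respondents aged 55+ are heavily overrepresented, with 100 observed versus 70 expected.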

Practice Questions

Question 1 (1–3 marks)

A newspaper invites readers to call a phone number to voice their opinion on a proposed increase in public transport fares. Explain why the results of this survey are likely to suffer from voluntary response bias.

Question 1 mark scheme

• 1 mark: States that participants choose whether to respond.

• 1 mark: Explains that people with strong opinions are more likely to participate.

• 1 mark: Notes that the results will not represent the entire readership/population.

Total: 3 marks

Question 2 (4–6 marks)

A school posts an online survey asking students to rate the quality of the cafeteria food. Only 12% of the student body chooses to respond, and most responses are strongly negative.

(a) Identify the type of bias present and explain how it arises in this scenario.

(b) Discuss two reasons why the results may not accurately reflect the views of the entire student population.

(c) Describe one method the school could use to reduce the bias in future surveys.

Question 2 mark scheme

(a)

• 1 mark: Identifies voluntary response bias.

• 1 mark: Explains that students self-select into the survey.

• 1 mark: States that those with strong negative opinions are more likely to respond.

(b)

• 1 mark: Mentions that non-respondents may have different views from respondents.

• 1 mark: Notes that the low response rate limits representativeness.

• 1 mark: Explains that students with neutral or positive views may not have taken part.

(c)

• 1 mark: Suggests a suitable improvement (e.g., random sampling, encouraging higher participation, offering follow-ups).

• 1 mark: Explains how the suggested method would reduce the bias.

Total: 6 marks (award up to 6 of the 8 mark points listed)